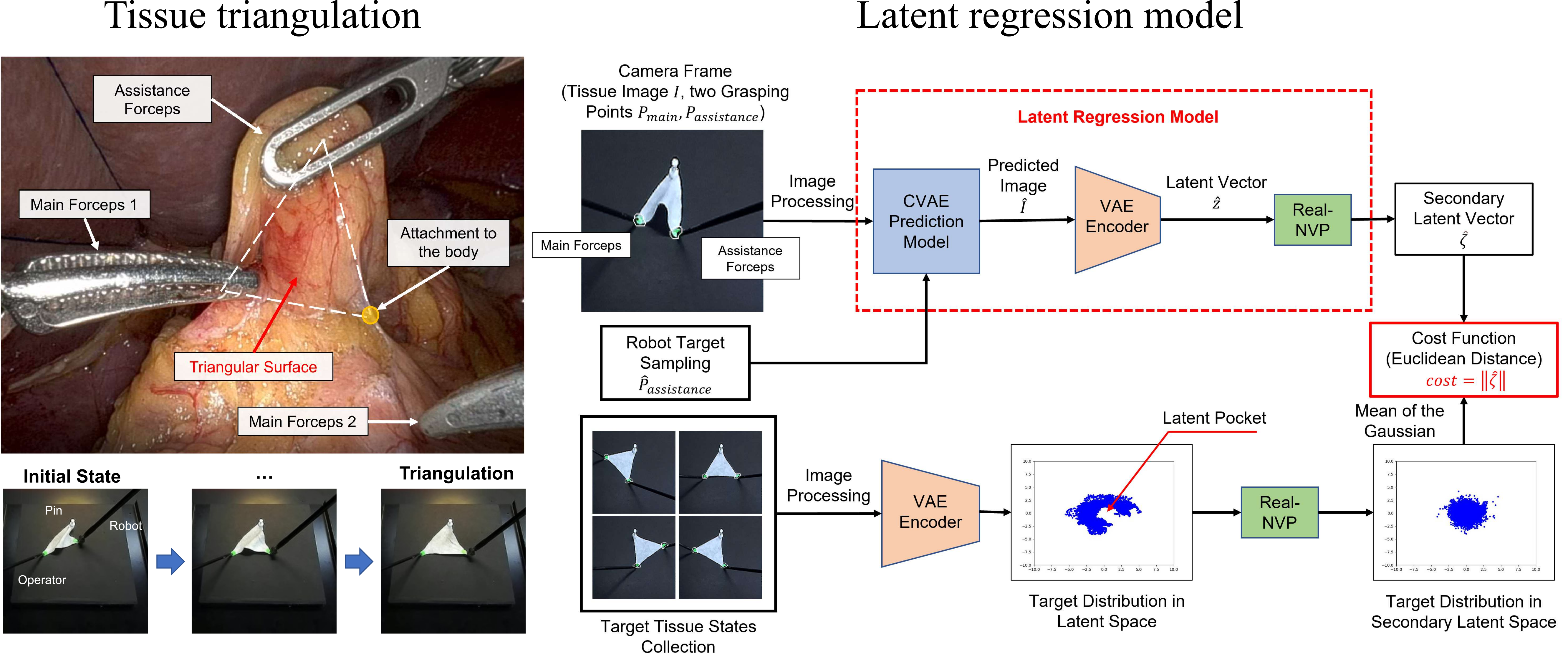

Autonomous tissue manipulation

We are exploring latent regression models for autonomous tissue triangulation in robot-assisted surgery. The proposed models predict tissue deformation and select optimal robot actions using a cross-entropy-based search in the latent space. We are also exploring real-to-sim techniques so that policies can be trained in simulation with reinforcement learning methods and then transferred to the real world.
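The cross-entropy method (CEM) is one standard form of cross-entropy-based search. The minimal sketch below selects a single action with CEM, assuming a hypothetical toy latent model (`latent_step`) in place of the learned regression model and a squared-distance cost to a goal latent state. The population size, elite count and iteration count are illustrative, not values from the project.

```python
import random
import statistics

def latent_step(z, a):
    """Hypothetical latent dynamics standing in for a learned model: z' = z + a."""
    return [zi + ai for zi, ai in zip(z, a)]

def cost(z, z_goal):
    """Squared Euclidean distance between a predicted latent state and the goal."""
    return sum((zi - gi) ** 2 for zi, gi in zip(z, z_goal))

def cem_select_action(z0, z_goal, pop=64, n_elite=8, iters=20, seed=0):
    """Cross-entropy method: sample actions, keep the elites, refit a diagonal Gaussian."""
    rng = random.Random(seed)
    dim = len(z0)
    mu, sigma = [0.0] * dim, [1.0] * dim
    for _ in range(iters):
        samples = [[rng.gauss(m, s) for m, s in zip(mu, sigma)] for _ in range(pop)]
        # Rank candidate actions by the cost of the latent state they are predicted to reach.
        samples.sort(key=lambda a: cost(latent_step(z0, a), z_goal))
        elites = samples[:n_elite]
        mu = [statistics.fmean(a[i] for a in elites) for i in range(dim)]
        # A small floor keeps the sampling distribution from collapsing to zero width.
        sigma = [statistics.pstdev([a[i] for a in elites]) + 1e-6 for i in range(dim)]
    return mu
```

For example, `cem_select_action([0.0, 0.0], [1.0, -0.5])` returns an action close to `[1.0, -0.5]`, because under this toy model that action moves the latent state straight to the goal. With a learned model, `latent_step` would be replaced by the model's prediction and the cost would score predicted tissue states.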

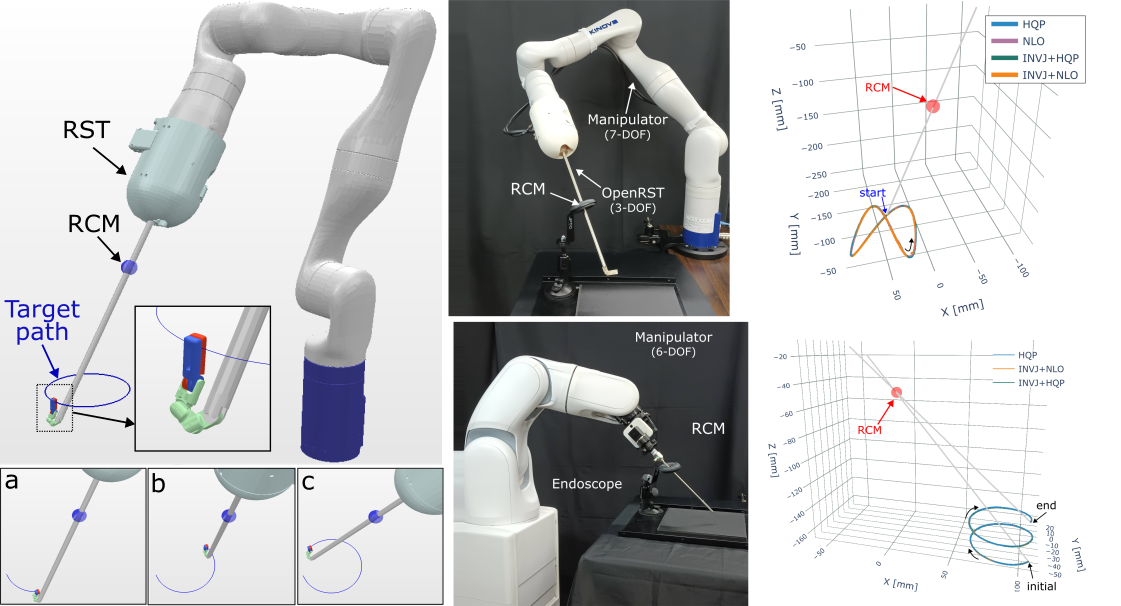

Constrained Motion Planning for Surgical Robots

We are developing real-time, multi-objective, concurrent inverse kinematics (IK) solvers for constrained motion planning in surgical robotics. The solvers handle multiple constraints, such as joint limits, the remote center of motion (RCM), manipulability, collision avoidance, and task constraints.
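A minimal sketch of the multi-objective, concurrent idea follows, under loudly stated assumptions: a hypothetical planar two-link arm, objectives combined as a weighted sum (task error plus a joint-range centering term), joint limits enforced by clamping, and several projected-gradient solvers started from different seeds and run concurrently, keeping the best result. RCM, manipulability and collision terms are not modeled; they would enter as additional weighted terms or constraints.

```python
import math
import random
from concurrent.futures import ThreadPoolExecutor

# Hypothetical planar two-link arm (link lengths in m, joint limits in rad).
LINKS = (1.0, 0.8)
LIMITS = [(-math.pi / 2, math.pi / 2), (0.0, 2.5)]

def fk(q):
    """Forward kinematics: end-effector (x, y) for joint angles q."""
    l1, l2 = LINKS
    x = l1 * math.cos(q[0]) + l2 * math.cos(q[0] + q[1])
    y = l1 * math.sin(q[0]) + l2 * math.sin(q[0] + q[1])
    return x, y

def cost(q, target, w_task=1.0, w_center=0.01):
    """Weighted sum of objectives: task error plus a joint-range centering term."""
    x, y = fk(q)
    task = (x - target[0]) ** 2 + (y - target[1]) ** 2
    center = sum((qi - (lo + hi) / 2) ** 2 for qi, (lo, hi) in zip(q, LIMITS))
    return w_task * task + w_center * center

def clamp(q):
    """Hard constraint: project each joint back into its limits."""
    return [min(max(qi, lo), hi) for qi, (lo, hi) in zip(q, LIMITS)]

def solve_from_seed(seed, target, iters=1000, lr=0.05, eps=1e-6):
    """Projected gradient descent with a finite-difference gradient from a random start."""
    rng = random.Random(seed)
    q = [rng.uniform(lo, hi) for lo, hi in LIMITS]
    for _ in range(iters):
        base = cost(q, target)
        grad = []
        for i in range(len(q)):
            q_step = q.copy()
            q_step[i] += eps
            grad.append((cost(q_step, target) - base) / eps)
        q = clamp([qi - lr * g for qi, g in zip(q, grad)])
    return cost(q, target), q

def concurrent_ik(target, n_seeds=8, workers=4):
    """Run several solver instances concurrently and keep the lowest-cost result."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(lambda s: solve_from_seed(s, target), range(n_seeds)))
    return min(results, key=lambda r: r[0])[1]
```

For a reachable target such as `fk([0.3, 1.0])`, `concurrent_ik` returns joint angles inside the limits whose end-effector position is close to the target. The seeds are independent, so the same structure maps onto separate processes or a compiled solver; with CPython threads the GIL prevents CPU-bound code from running in parallel, so here the threads only illustrate the structure.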

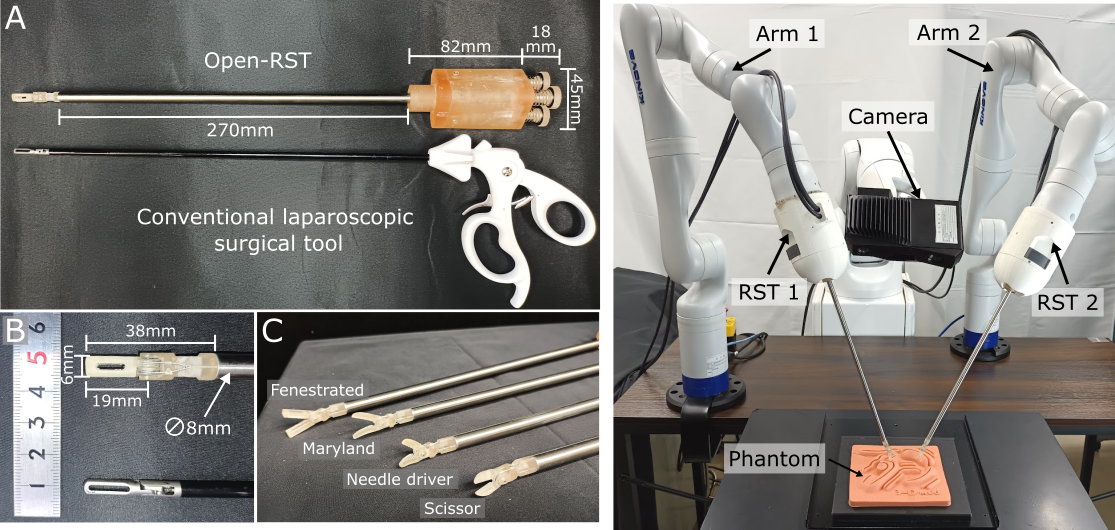

Development of biocompatible 3D-printed surgical tools

We are developing and evaluating biocompatible 3D-printed surgical tools that use cable-driven mechanisms for minimally invasive surgery. The tools provide multiple degrees of freedom (DOF), and their design is optimized to decouple motion and force.
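In many cable-driven wrists, the cable of a distal joint passes over the proximal joint, so moving the proximal joint also changes the distal cable length. The sketch below assumes such a hypothetical two-joint design with a constant lower-triangular coupling between joint angles and cable displacements, and shows how a controller can invert it so that each joint moves independently. The pulley radii are illustrative, not dimensions of the project's tools.

```python
# Hypothetical pulley radii in mm (illustrative only).
R1 = 3.0       # joint-1 drive pulley
R2 = 2.0       # joint-2 drive pulley
R_IDLER = 1.5  # idler at joint 1 that the joint-2 cable wraps around

def joints_to_cables(theta1, theta2):
    """Forward map d = C @ theta with C = [[R1, 0], [R_IDLER, R2]] (rad -> mm)."""
    d1 = R1 * theta1
    d2 = R_IDLER * theta1 + R2 * theta2  # coupling: joint-1 motion also pulls cable 2
    return d1, d2

def cables_to_joints(d1, d2):
    """Invert the lower-triangular coupling matrix by forward substitution (mm -> rad)."""
    theta1 = d1 / R1
    theta2 = (d2 - R_IDLER * theta1) / R2
    return theta1, theta2
```

For example, rotating joint 1 by 0.5 rad while holding joint 2 still requires `joints_to_cables(0.5, 0.0) == (1.5, 0.75)`: cable 2 must be driven by 0.75 mm purely to compensate. By the principle of virtual work, the transpose of the same matrix maps cable tensions to joint torques, which is why motion and force coupling are usually addressed together in the design.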

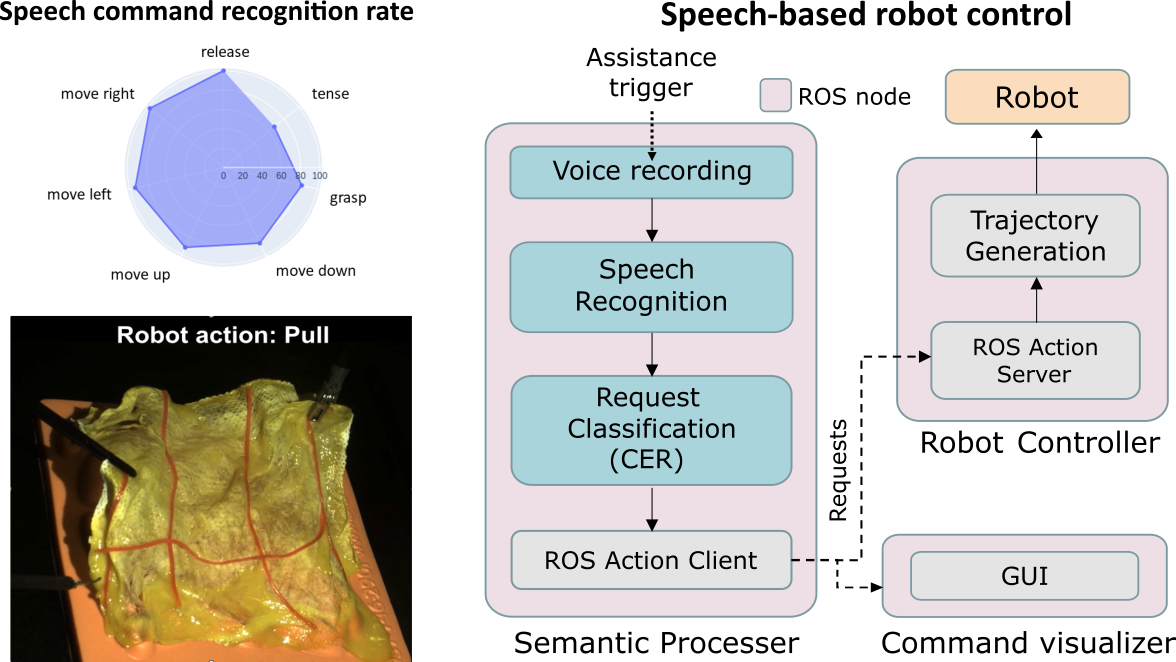

Speech-based robot control for interactive collaboration

This project combines speech recognition and action execution for interactive robot collaboration. It aims to develop a voice-based interface that allows humans to communicate with a robot assistant through natural-language commands.
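The speech recognition step would come from an external engine; the sketch below assumes the transcript is already available as text and shows one simple way to map it to robot actions with keyword patterns. The command vocabulary (`stop`, `hand_over`, `move_camera`) is hypothetical, and a natural-language interface would likely use a learned intent model rather than regular expressions.

```python
import re
from dataclasses import dataclass
from typing import Optional

@dataclass
class Command:
    action: str
    target: Optional[str] = None

# Hypothetical command vocabulary, checked in order. "stop" comes first so a
# safety command is never shadowed by another pattern in the same utterance.
PATTERNS = [
    (re.compile(r"\b(?:stop|halt|freeze)\b"), "stop"),
    (re.compile(r"\b(?:hand|give|pass) me (?:the )?(\w+)"), "hand_over"),
    (re.compile(r"\bmove (?:the )?camera (left|right|up|down)\b"), "move_camera"),
]

def parse_command(transcript: str) -> Optional[Command]:
    """Map a speech-recognition transcript to a robot command, or None if unrecognized."""
    text = transcript.lower()
    for pattern, action in PATTERNS:
        match = pattern.search(text)
        if match:
            # Patterns with a capture group carry an argument (object or direction).
            target = match.group(1) if pattern.groups else None
            return Command(action, target)
    return None
```

For example, `parse_command("Hand me the scissors")` yields `Command(action="hand_over", target="scissors")`. Returning `None` for unrecognized speech lets the interface ask the user to repeat instead of guessing an action.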

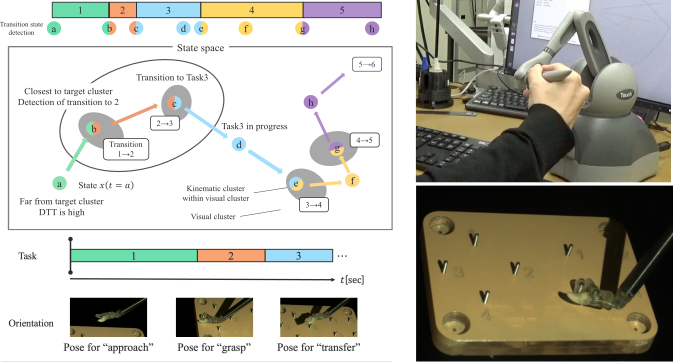

Online surgical action recognition for autonomous robotic assistance

We are exploring how online surgical action recognition can activate predefined robot assistance during surgical tasks. The project proposes a hierarchical framework that first clusters the visual features of the surgical scene and then classifies the proprioceptive features of the robot arm to distinguish between different surgical actions.
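The two-stage structure can be sketched as follows, assuming k-means for the visual clustering stage and a nearest-prototype classifier on proprioceptive features within each cluster; both choices, and the feature vectors, are hypothetical stand-ins for whatever the framework actually uses.

```python
import math

def nearest(p, centers):
    """Index of the center closest to point p (Euclidean distance)."""
    return min(range(len(centers)), key=lambda i: math.dist(p, centers[i]))

def mean(vectors):
    """Component-wise mean of a non-empty list of equal-length vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]

def kmeans(points, k, iters=20):
    """Lloyd's k-means with deterministic farthest-first initialization."""
    centroids = [list(points[0])]
    while len(centroids) < k:
        centroids.append(list(max(points, key=lambda p: min(math.dist(p, c) for c in centroids))))
    for _ in range(iters):
        groups = [[] for _ in centroids]
        for p in points:
            groups[nearest(p, centroids)].append(p)
        # Keep the previous centroid if a cluster ends up empty.
        centroids = [mean(g) if g else c for g, c in zip(groups, centroids)]
    return centroids

class HierarchicalRecognizer:
    """Stage 1 clusters visual features; stage 2 classifies proprioceptive
    features against per-cluster action prototypes."""

    def __init__(self, k):
        self.k = k

    def fit(self, visual, proprio, labels):
        self.centroids = kmeans(visual, self.k)
        buckets = {}
        for v, p, y in zip(visual, proprio, labels):
            buckets.setdefault((nearest(v, self.centroids), y), []).append(p)
        # One mean proprioceptive prototype per (visual cluster, action label).
        self.prototypes = {}
        for (c, y), ps in buckets.items():
            self.prototypes.setdefault(c, {})[y] = mean(ps)
        return self

    def predict(self, v, p):
        cluster = nearest(v, self.centroids)
        protos = self.prototypes.get(cluster)
        if not protos:  # cluster saw no training data: fall back to all prototypes
            protos = {y: m for d in self.prototypes.values() for y, m in d.items()}
        return min(protos, key=lambda y: math.dist(p, protos[y]))
```

The point of the hierarchy is that the same arm motion can mean different actions in different scenes: with two visually distinct scene clusters, an identical proprioceptive feature can be labeled as one action in the first cluster and another in the second.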

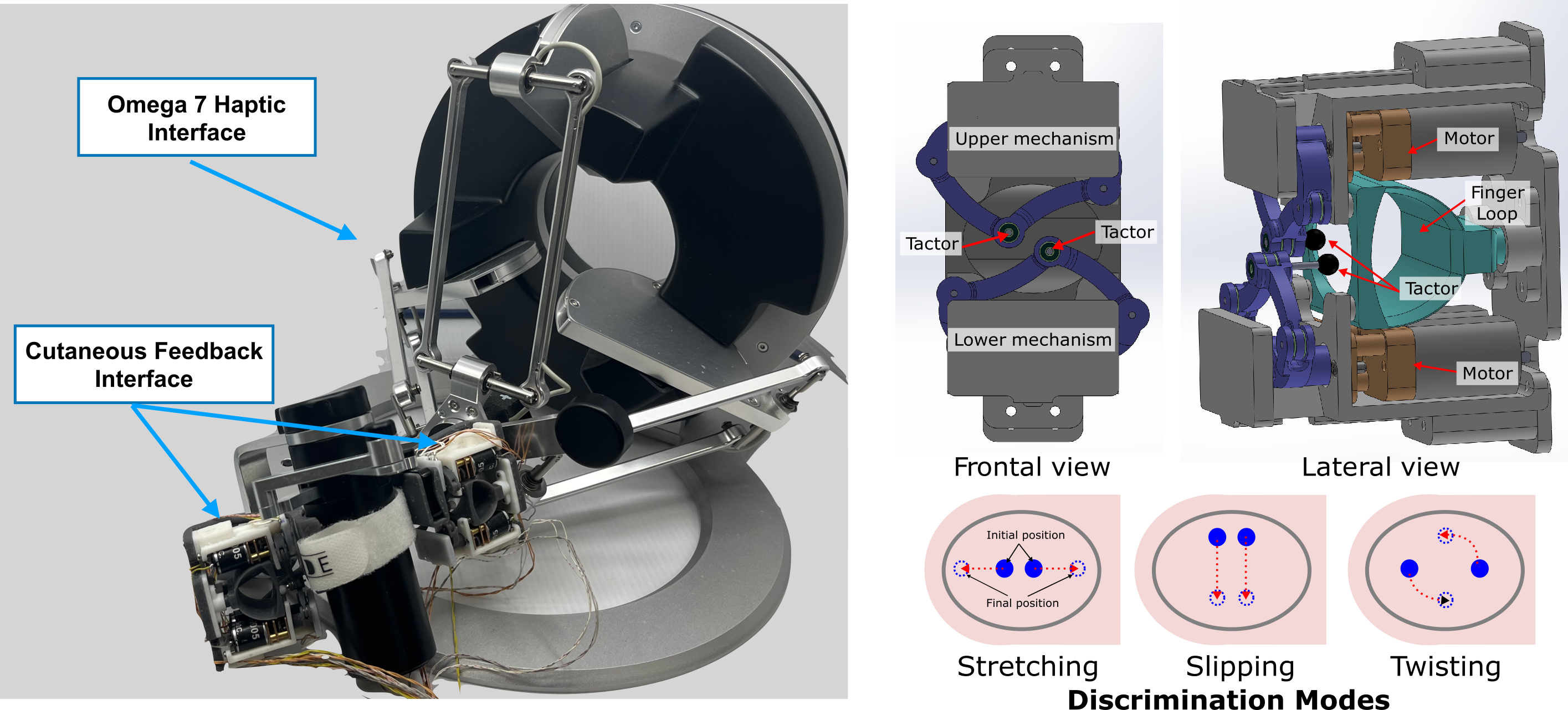

Development of haptic devices for teleoperation control of surgical robots

We are developing novel haptic devices (kinesthetic/tactile) to emulate the sense of touch during teleoperation of surgical robots. The project also incorporates virtual reality environments to deliver immersive and realistic feedback and control for the operator.
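Kinesthetic feedback is commonly rendered with a virtual spring-damper: when the handle penetrates a virtual surface, the device pushes back with a force proportional to penetration depth, plus a damping term. The sketch below assumes a one-dimensional wall occupying x < 0 and hypothetical stiffness, damping and force-limit values; it illustrates the general technique, not the project's rendering method.

```python
def wall_force(x, v, k=500.0, b=2.0, f_max=3.0):
    """Force (N) on the haptic handle from a virtual wall occupying x < 0.

    x: handle position (m), v: handle velocity (m/s).
    k: stiffness (N/m), b: damping (N*s/m), f_max: actuator limit (N); all hypothetical.
    """
    if x >= 0.0:
        return 0.0  # free space: no force
    penetration = -x
    force = k * penetration - b * v  # spring pushes out; damping resists motion
    # A wall can only push, never pull, and the actuators saturate at f_max.
    return min(max(force, 0.0), f_max)
```

For example, 2 mm of penetration at rest gives about 1 N, while 10 mm saturates at the 3 N limit. In practice such a function is typically evaluated inside a high-rate servo loop, since low update rates make stiff virtual surfaces feel soft or unstable.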